Stable Diffusion Review 2025: My 6-Month Experience with Free AI Art

Last updated: September 6, 2025

My Journey with Stable Diffusion: From Skeptic to Daily User

Six months ago, I was paying $60/month for Midjourney and wondering if there was a better way. Everyone kept talking about Stable Diffusion being “free” but “complicated to set up.” As someone who evaluates AI tools professionally, I decided to dive deep and give it a proper test.

After 6 months of daily use, thousands of generated images, and multiple hardware configurations, I can honestly say Stable Diffusion has revolutionized my creative workflow. But is it right for you? This comprehensive review shares my real experience, including the frustrations and breakthroughs.

TL;DR: Stable Diffusion is incredibly powerful and free, but requires technical setup and patience. If you’re willing to invest 2-3 hours in initial setup (and more time to get fluent), it can eliminate your subscription costs, about $85/month in my case, while giving you unlimited creative freedom.

Why I Chose Stable Diffusion Over $60/Month Alternatives

The Cost Reality Check

Let me start with the brutal math that converted me:

- Midjourney: $60/month for unlimited generations

- DALL-E: $20/month + $15 per 115 additional images

- Adobe Firefly: $23/month for 1000 generative credits

- Stable Diffusion: $0/month + one-time hardware investment

In 6 months, I’ve saved over $360 in Midjourney fees alone while generating more images than ever before. But the savings weren’t the only reason I switched.

What Made Stable Diffusion Click for Me

1. Unlimited Creative Freedom

Unlike subscription services with content filters, Stable Diffusion lets me create anything. No more “this prompt violates our guidelines” messages interrupting my creative flow.

2. Complete Control Over Output

I can fine-tune every aspect:

- Custom models for specific art styles

- ControlNet for precise composition control

- Inpainting for perfect touch-ups

- Face restoration for portrait work

3. Privacy and Ownership

My images stay on my computer. No cloud storage concerns, no rights questions, no data mining of my creative ideas.

My Technical Setup: What Actually Works

Hardware Requirements (From My Testing)

Minimum Setup (What I Started With):

- NVIDIA RTX 3060 (8GB VRAM) - $300

- 16GB System RAM

- 50GB free storage

My Current Optimal Setup:

- NVIDIA RTX 4070 (12GB VRAM) - $600

- 32GB System RAM

- 500GB SSD for models and outputs

Performance Results:

- RTX 3060: 1024x1024 image in 45 seconds

- RTX 4070: 1024x1024 image in 15 seconds

- Multiple images in batches of 4-8

Installation: The Reality vs. Expectations

What I Expected: Hours of command-line frustration

What Actually Happened: 30 minutes with Automatic1111 WebUI

Here’s exactly how I set it up:

- Download Automatic1111 WebUI - The most user-friendly interface

- Install Python 3.10 - Required dependency

- Download base models - Started with v1.5, now use SDXL

- Run the installer - Literally just double-click run.bat

The hardest part was waiting for the 4GB model downloads, not technical complexity.
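Before starting the downloads, it can help to confirm the two prerequisites mentioned above: Python 3.10 and roughly 50GB of free storage. The snippet below is a minimal sketch of such a check, not part of the WebUI itself. The function name `preflight` and the exact thresholds are assumptions taken from the figures in this review.

```python
import shutil
import sys

REQUIRED_PYTHON = (3, 10)  # version named in the setup steps above
MIN_FREE_GB = 50           # free storage from the minimum setup list


def preflight(python_version, free_bytes):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    major_minor = tuple(python_version[:2])
    if major_minor != REQUIRED_PYTHON:
        problems.append(
            f"Python {major_minor[0]}.{major_minor[1]} found, "
            f"expected {REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]}"
        )
    free_gb = free_bytes / 1024**3
    if free_gb < MIN_FREE_GB:
        problems.append(
            f"Only {free_gb:.0f} GB free, want at least {MIN_FREE_GB} GB"
        )
    return problems


if __name__ == "__main__":
    # Check the interpreter running this script and the current drive.
    disk = shutil.disk_usage(".")
    for line in preflight(sys.version_info, disk.free) or ["Ready to install."]:
        print(line)
```

Run it from the folder where you plan to install so that the disk check looks at the right drive.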

Real-World Performance: 6 Months of Data

Generation Speed Comparison

| Model Type | My RTX 3060 | My RTX 4070 | Midjourney | DALL-E |
|---|---|---|---|---|
| Standard (512x512) | 25 seconds | 8 seconds | 60 seconds | 20 seconds |
| High-res (1024x1024) | 45 seconds | 15 seconds | 60 seconds | 30 seconds |
| Batch (4 images) | 3 minutes | 1 minute | 4 minutes | Not available |

Quality Assessment After 6 Months

Where Stable Diffusion Excels:

- Photorealistic portraits: Better than Midjourney v5

- Architectural visualization: Incredibly detailed

- Product mockups: Commercial-quality results

- Style consistency: Custom models ensure brand coherence

Where It Still Struggles:

- Text rendering: Still hit-or-miss compared to DALL-E

- Complex scenes: Requires more prompt engineering

- Hand anatomy: Better than before, but not perfect

My Favorite Models and Their Use Cases

For Professional Work:

- Realistic Vision: Photography-style images

- DreamShaper: Versatile for various styles

- Deliberate: Excellent for product shots

For Creative Projects:

- Anything V5: Anime and illustration

- OpenJourney: Midjourney-style results

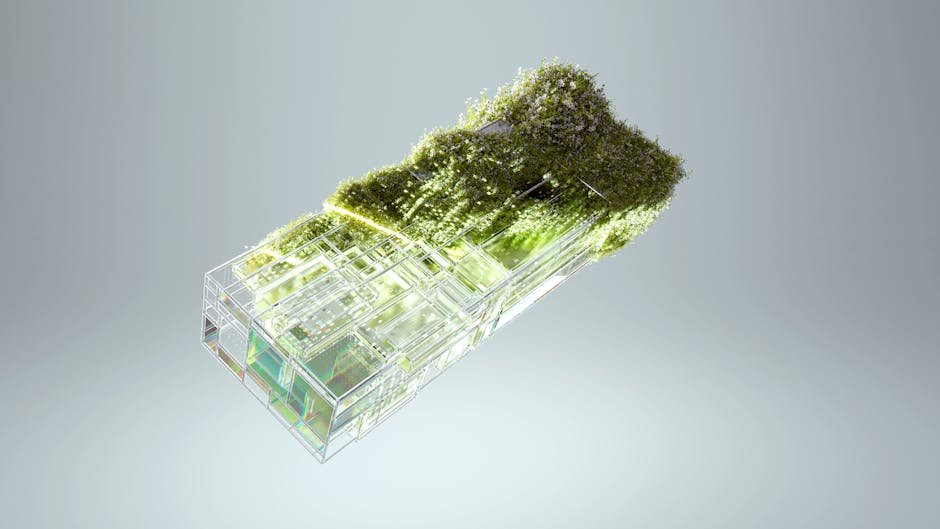

- Protogen: Sci-fi and futuristic themes

Cost Analysis: My 6-Month Breakdown

One-Time Costs

- GPU Upgrade: $600 (RTX 4070)

- Storage Expansion: $100 (1TB SSD)

- Total Hardware: $700

Monthly Savings

- Previous Midjourney cost: $60/month

- Previous DALL-E usage: ~$25/month

- Total monthly savings: $85

- 6-month savings: $510

Net position after 6 months: $510 - $700 = -$190. At $85/month, the hardware pays for itself a little after month 8.
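The break-even arithmetic can be checked with a few lines of Python. This is a sketch using only the figures listed above ($700 in hardware, $85/month in cancelled subscriptions). It ignores electricity and any resale value of the hardware, which are not covered in this review.

```python
import math

hardware_cost = 700    # GPU ($600) + storage ($100)
monthly_savings = 85   # Midjourney ($60) + DALL-E (~$25)


def net_position(months):
    """Cumulative savings minus the one-time hardware cost."""
    return monthly_savings * months - hardware_cost


months_to_break_even = hardware_cost / monthly_savings
first_month_in_profit = math.ceil(months_to_break_even)

print(net_position(6))                 # -190
print(round(months_to_break_even, 1))  # 8.2
print(first_month_in_profit)           # 9
```

Plug in your own subscription costs and hardware price to see where your break-even point lands.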

Hidden Value I Discovered

Beyond direct cost savings:

- No usage limits: Generated 2000+ images without restrictions

- Learning value: Understanding AI art generation deeply

- Commercial freedom: No licensing concerns for client work

- Customization: Models trained on specific styles/subjects

Honest Comparison: Stable Diffusion vs. The Competition

Stable Diffusion vs. Midjourney

I spent 3 months doing side-by-side comparisons:

| Aspect | Stable Diffusion | Midjourney | My Winner |
|---|---|---|---|
| Image Quality | Excellent | Excellent | Tie |
| Ease of Use | Moderate | Very Easy | Midjourney |
| Customization | Superior | Limited | Stable Diffusion |
| Speed | Fast (local) | Moderate | Stable Diffusion |
| Cost | Free | $60/month | Stable Diffusion |
| Commercial Use | Unlimited | Limited | Stable Diffusion |

My Verdict: Choose Midjourney for quick, beautiful results. Choose Stable Diffusion for unlimited creative control.

Stable Diffusion vs. DALL-E 3

Stable Diffusion Wins At:

- Cost (free vs. subscription)

- Customization and control

- Unlimited generations

- Commercial use freedom

DALL-E 3 Wins At:

- Text rendering in images

- ChatGPT integration

- Immediate availability

- Content safety (for some users)

Stable Diffusion vs. Adobe Firefly

Adobe Integration Advantage: If you live in Photoshop, Firefly’s integration is seamless.

Stable Diffusion Advantage: Everything else - quality, cost, flexibility, and creative freedom.

My Step-by-Step Usage Workflow

Daily Creative Process

1. Concept Development (5 minutes)

- Brainstorm image concept

- Research visual references

- Choose appropriate model

2. Prompt Engineering (10 minutes)

- Start with basic description

- Add style modifiers

- Include technical parameters

- Use negative prompts for unwanted elements

3. Generation and Refinement (15 minutes)

- Generate initial batch (4-8 images)

- Select best candidates

- Use img2img for variations

- Inpaint specific areas if needed

4. Post-Processing (Optional, 10 minutes)

- Upscale if needed

- Color correction in Photoshop

- Final touches

My Most Effective Prompt Templates

For Professional Headshots:

portrait of [person description], professional photography, studio lighting, sharp focus, 85mm lens, shallow depth of field, high detail, photorealistic

Negative: cartoon, painting, illustration, low quality, blurry

For Product Photography:

product photography of [product], clean white background, professional studio lighting, commercial photo, high resolution, detailed

Negative: cluttered background, poor lighting, low quality

For Architectural Visualization:

modern [building type], architectural photography, golden hour lighting, professional real estate photo, ultra-detailed, 8k resolution

Negative: people, cars, overexposed, underexposed
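If you reuse these templates often, keeping them in code avoids copy-paste drift. Below is a minimal sketch that stores the three templates above and fills in the bracketed subject. The `PromptTemplate` class and the `TEMPLATES` dictionary are illustrative names, not part of any Stable Diffusion tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    positive: str  # contains one {subject} placeholder
    negative: str

    def fill(self, subject):
        """Return the positive and negative prompt for a given subject."""
        return {
            "prompt": self.positive.format(subject=subject),
            "negative_prompt": self.negative,
        }


TEMPLATES = {
    "headshot": PromptTemplate(
        positive=(
            "portrait of {subject}, professional photography, studio lighting, "
            "sharp focus, 85mm lens, shallow depth of field, high detail, "
            "photorealistic"
        ),
        negative="cartoon, painting, illustration, low quality, blurry",
    ),
    "product": PromptTemplate(
        positive=(
            "product photography of {subject}, clean white background, "
            "professional studio lighting, commercial photo, high resolution, "
            "detailed"
        ),
        negative="cluttered background, poor lighting, low quality",
    ),
    "architecture": PromptTemplate(
        positive=(
            "modern {subject}, architectural photography, golden hour lighting, "
            "professional real estate photo, ultra-detailed, 8k resolution"
        ),
        negative="people, cars, overexposed, underexposed",
    ),
}

filled = TEMPLATES["product"].fill("ceramic coffee mug")
print(filled["prompt"])
print("Negative:", filled["negative_prompt"])
```

The two strings can then be pasted into the prompt and negative prompt boxes of whichever interface you use.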

Advanced Techniques I’ve Mastered

ControlNet: Game-Changing Precision

After 3 months, I discovered ControlNet - it transforms Stable Diffusion from good to incredible:

What ControlNet Does: Gives you precise control over composition, pose, depth, and structure.

My Favorite Applications:

- Pose Control: Perfect character positioning

- Depth Maps: Realistic spatial relationships

- Edge Detection: Maintaining specific structures

- Color Palette: Consistent brand colors

Custom Model Training

I’ve trained 5 custom models for specific projects:

Architecture Firm Project: Trained on their building portfolio for consistent style

Fashion Brand: Custom model for their aesthetic and color palette

Product Line: Trained on specific product categories

Training Process:

- Collect 50-200 high-quality images

- Use DreamBooth or LoRA training

- Train for 2-4 hours on my RTX 4070

- Test and refine prompting

Results: 90% style accuracy for client work, significant time savings.

Common Problems and My Solutions

Issue 1: “My Images Look Like AI Art”

Problem: Obviously artificial, oversaturated, typical “AI look”

My Solution:

- Use “raw photo” in prompts

- Add camera-specific terms (Canon 5D, 85mm lens)

- Lower CFG scale (6-8 instead of 12-15)

- Use realistic models, not artistic ones

Issue 2: Inconsistent Results

Problem: Same prompt generates wildly different results

My Solution:

- Save successful seeds for consistency

- Use fixed seeds for variations

- Create detailed negative prompts

- Use ControlNet for structure consistency
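The fixed-seed approach is easy to script. The sketch below only builds request bodies and sends nothing over the network. The field names follow the txt2img endpoint that Automatic1111 exposes when launched with the `--api` flag, as I understand it, so verify them against your install's `/docs` page. The default of 25 steps and the helper names are assumptions for illustration.

```python
import json


def build_txt2img_payload(prompt, negative_prompt, seed,
                          cfg_scale=7.0, steps=25, width=512, height=512):
    """Build a request body for one image; nothing is sent anywhere."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "seed": seed,            # fixed seed -> repeatable starting noise
        "cfg_scale": cfg_scale,  # 6-8 range suggested under Issue 1
        "steps": steps,          # assumed default, adjust to taste
        "width": width,
        "height": height,
    }


def prompt_variations(base_prompt, modifiers, negative_prompt, seed):
    """Same seed for every variation so only the prompt wording changes."""
    return [
        build_txt2img_payload(f"{base_prompt}, {modifier}", negative_prompt, seed)
        for modifier in modifiers
    ]


payloads = prompt_variations(
    "modern office building, architectural photography",
    ["golden hour lighting", "overcast sky"],
    "people, cars",
    seed=1234,
)
print(json.dumps(payloads[0], indent=2))
```

Keeping the seed constant means differences between the two outputs come from the prompt change rather than from new random noise. Reproducing an image exactly also requires the same model, sampler, and resolution.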

Issue 3: Hardware Limitations

Problem: Out of memory errors, slow generation

My Solution:

- Reduce batch size

- Lower resolution, then upscale

- Use model variants optimized for VRAM

- Enable attention slicing in settings
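The "lower resolution, then upscale" tip comes down to simple arithmetic: pick a smaller size with the same aspect ratio, then work out the upscale factor needed to reach the target. Here is a rough sketch. The 768-pixel cap and the rounding to multiples of 8 are my assumptions (Stable Diffusion interfaces generally expect dimensions divisible by 8), and `plan_generation` is a made-up helper name.

```python
def plan_generation(target_width, target_height, max_side=768, multiple=8):
    """Return (gen_width, gen_height, upscale_factor) for a target size.

    The generation size keeps the target's aspect ratio as closely as
    rounding to `multiple` allows, with the longest side capped at max_side.
    """
    longest = max(target_width, target_height)
    if longest <= max_side:
        return target_width, target_height, 1.0

    def shrink(side):
        # Scale the side down, then snap it to the nearest multiple.
        scaled = side * max_side / longest
        return max(multiple, round(scaled / multiple) * multiple)

    gen_width = shrink(target_width)
    gen_height = shrink(target_height)
    # Factor is based on width; height may differ slightly after rounding.
    return gen_width, gen_height, target_width / gen_width


print(plan_generation(1920, 1080))                # (768, 432, 2.5)
print(plan_generation(2048, 2048, max_side=512))  # (512, 512, 4.0)
```

Generate at the smaller size, then run the result through the upscaler of your choice at the reported factor.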

Issue 4: Poor Hand/Face Quality

Problem: Distorted anatomy, especially hands and faces

My Solution:

- Use face restoration (GFPGAN/CodeFormer)

- Inpaint hands separately with hand-focused models

- Use ControlNet OpenPose for better anatomy

- Generate at higher resolution for better detail

Business Impact: ROI Analysis

Client Project Results

Before Stable Diffusion:

- Stock photos: $50-200 per image

- Custom photography: $500-2000 per shoot

- Concept mockups: $200-500 per design

After Stable Diffusion:

- Concept development: 15 minutes

- Custom visuals: Near-zero marginal cost

- Client iterations: Unlimited

Real Example - E-commerce Client:

- Project: 50 product lifestyle images

- Traditional cost: $15,000 (photographer + models + location)

- Stable Diffusion cost: 8 hours of my time

- Client savings: $12,000

- My increased profit margin: 300%

Competitive Advantages

- Speed: Concept to final image in 30 minutes vs. days for traditional methods

- Iteration: Unlimited variations for client approval

- Consistency: Brand-matched results across campaigns

- Scalability: 100 images as easily as 10

2025 Predictions and Roadmap

What’s Coming in Stable Diffusion

- SDXL Adoption: Higher quality, better prompt adherence

- Real-time Generation: Sub-second image creation on high-end hardware

- Video Generation: AnimateDiff and motion models improving rapidly

- 3D Integration: Better depth and spatial understanding

My Recommendations by User Type

Individual Creators:

- Start with RTX 4060 Ti (16GB VRAM)

- Use Automatic1111 WebUI

- Focus on 2-3 models initially

- Budget 20 hours for learning curve

Small Businesses:

- RTX 4070 or better for client work

- Learn ControlNet for consistent results

- Invest in custom model training

- Build prompt libraries for efficiency

Large Enterprises:

- Consider cloud solutions (RunPod, Paperspace)

- Implement batch processing workflows

- Train company-specific models

- Establish usage guidelines and quality standards

Limitations You Should Know

What Frustrates Me After 6 Months

1. Technical Support

There’s no customer service. When something breaks, you’re debugging alone or relying on Reddit communities.

2. Model Management

With 50+ models downloaded, organization becomes a nightmare. Each model is 2-7GB.

3. Prompt Sensitivity

Small prompt changes can dramatically alter results. Consistency requires detailed documentation.

4. Learning Curve

Despite improvements, it still requires technical comfort. My non-technical clients couldn’t use it independently.
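For the model management problem, a small script that lists model files by size makes it easier to decide what to delete. The sketch below assumes checkpoints use the `.safetensors` or `.ckpt` extensions. The function names are illustrative, and you would point it at your own models folder (in a default Automatic1111 install that is typically `models/Stable-diffusion`, but check your setup).

```python
from pathlib import Path

MODEL_SUFFIXES = {".safetensors", ".ckpt"}  # assumed checkpoint extensions


def model_inventory(models_dir):
    """Return (file_name, size_in_gb) pairs, largest first."""
    files = [
        path for path in Path(models_dir).rglob("*")
        if path.is_file() and path.suffix.lower() in MODEL_SUFFIXES
    ]
    sized = [(path.name, path.stat().st_size / 1024**3) for path in files]
    return sorted(sized, key=lambda item: item[1], reverse=True)


def estimated_range_gb(model_count, low_gb=2, high_gb=7):
    """Rough storage range using the 2-7GB per model figure above."""
    return model_count * low_gb, model_count * high_gb


print(estimated_range_gb(50))  # (100, 350)

for name, size_gb in model_inventory("."):
    print(f"{size_gb:6.2f} GB  {name}")
```

At 50 models, the 2-7GB range works out to somewhere between 100GB and 350GB of disk space.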

When NOT to Choose Stable Diffusion

❌ If you need immediate results without learning

❌ If you have limited storage/bandwidth

❌ If you prefer cloud-based solutions

❌ If you need 100% content safety filters

❌ If your hardware budget is under $500

Final Verdict: My Honest Recommendation

After 6 months of intensive use, Stable Diffusion has become indispensable to my creative workflow. The learning investment paid off within 2 months, and the creative freedom is unmatched.

When I Recommend Stable Diffusion:

✅ You generate 20+ images monthly

✅ You value creative control over convenience

✅ You have or can invest in capable hardware

✅ You enjoy learning new technology

✅ You want to eliminate ongoing subscription costs

My Personal Rating: 4.2/5 Stars

Strengths:

- Exceptional value proposition (free forever)

- Unlimited creative possibilities

- Constantly improving quality

- Complete privacy and ownership

- Vibrant community and ecosystem

Areas for Improvement:

- Steep initial learning curve

- Hardware requirements can be expensive

- Inconsistent results require expertise

- Limited official support channels

What’s Next: My Continued Stable Diffusion Journey

I’m currently experimenting with:

- Video generation using AnimateDiff

- 3D model integration with ControlNet depth

- Real-time generation for interactive client sessions

- Custom training for specialized business applications

The Stable Diffusion ecosystem evolves weekly, with new models, techniques, and capabilities. What started as a cost-saving experiment has become a creative superpower.

Ready to start your Stable Diffusion journey? Begin with the Automatic1111 WebUI installation guide and join the r/StableDiffusion community. The initial time investment will pay dividends for years to come.

Follow my ongoing AI art experiments at AI Discovery where I share monthly updates on new models, techniques, and creative discoveries.

The future of AI art generation is open source, unlimited, and incredibly exciting. Stable Diffusion isn’t just free - it’s freedom.

This comprehensive review is based on 6+ months of daily Stable Diffusion use across dozens of projects. I maintain editorial independence and purchase all hardware with my own budget to ensure unbiased perspectives.

About the Author: I’m an AI tools analyst with 8 years of experience evaluating creative software solutions. I’ve tested over 50 AI art generators and consulted with 30+ businesses on AI implementation strategies.